Today’s guest blog is written by Max M. Houck, Ph.D., FRSC, Global Forensic & Justice Center, Florida International University. Reposted from the ISHI Report with permission.

With all the Sturm und Drang regarding how artificial intelligence (AI) will transform/elevate/destroy nearly every sector of professional and personal life, it seems a good time to take a look at one area where AI is likely to be applied sooner and have an outsized effect: forensic science. Whether that effect is good or bad depends, as with every other potential AI application, on how it is written and what data are used to train it. The outsized part comes from the power of forensic methods and technology to identify the guilty, misidentify the innocent, and exonerate those wrongly accused. That’s typical for forensic methods, like fingerprints or DNA, but with AI, the reach, speed, and breadth of the software exaggerate forensic science’s already large footprint on civil liberties and criminal justice.

Will the future be a Star Trek-like AI utopia (“Computer, analyze this DNA sample.”) or a death-by-robots apocalypse (“I’ll be back.”)? Neither, most likely (Figure 1). But AI has already begun to transform how we work and live; inevitably, it will have an effect on forensic science. This article offers a short explanation of AI, its significant historical events, and how it might affect forensic science and its related fields.

Figure 1

What is AI and how does it work?

Machine learning (ML), a core branch of AI, uses algorithms to create systems that learn from data and make decisions with minimal human intervention. Machine learning algorithms are trained on datasets to develop self-learning models capable of identifying patterns, predicting outcomes, and classifying information. These models can also adapt to new data and experiences, improving over time. AI algorithms are taught through supervised, unsupervised, or reinforcement learning. The main difference between supervised and unsupervised learning lies in the type of data used.

Supervised learning uses labeled training data to teach algorithms to recognize patterns and predict outcomes. The labeled data include the desired output values, providing the model with a baseline understanding of the correct answers. The algorithm trains itself by making predictions and adjusting to minimize error. Although supervised learning models are often more accurate than unsupervised ones, they require human intervention to label the data. For example, a supervised model could be trained to predict a house’s price based on data from thousands of other houses whose prices are known.

Unsupervised learning uses unlabeled data to teach models to discover patterns and insights without human supervision or guidance. These models can identify trends in a dataset by clustering similar data points, detecting outliers, or reducing the dimensionality of the data. For instance, unsupervised learning could be used to identify the target market for a new product when there’s no historical information about the target customer. ChatGPT is a type of generative AI whose underlying language model learns largely from vast amounts of unlabeled text rather than from hand-labeled examples. It learns by seeing examples of what it’s intended to generate, such as human-like text based on context and past conversations. Thus, supervised learning maps labeled data to known outputs, while unsupervised learning explores unlabeled data for patterns.
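To make the contrast concrete, here is a minimal sketch in plain Python. The supervised half fits a line to labeled house data (area paired with a known price) and predicts a new price; the unsupervised half groups unlabeled numbers into two clusters. All values, along with the helper names `fit_line` and `two_means`, are invented for illustration and do not come from any real system.

```python
from statistics import mean

# --- Supervised: every example carries a label (the known price). ---
# Invented data: floor area in square meters and price in thousands.
areas = [50.0, 80.0, 100.0, 120.0]
prices = [150.0, 240.0, 300.0, 360.0]

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
             / sum((x - x_bar) ** 2 for x in xs))
    return slope, y_bar - slope * x_bar

slope, intercept = fit_line(areas, prices)
predicted_price = slope * 90.0 + intercept  # price estimate for an unseen house

# --- Unsupervised: no labels, the algorithm only looks for structure. ---
def two_means(points, iterations=10):
    """Tiny one-dimensional k-means with k=2, seeded with the min and max."""
    centers = [min(points), max(points)]
    groups = ([], [])
    for _ in range(iterations):
        groups = ([], [])
        for p in points:
            nearest = 0 if abs(p - centers[0]) <= abs(p - centers[1]) else 1
            groups[nearest].append(p)
        # Move each center to the mean of the points assigned to it.
        centers = [mean(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

measurements = [1.0, 1.2, 0.8, 9.0, 9.5, 10.1]
centers, groups = two_means(measurements)

print(f"predicted price: {predicted_price:.1f}")
print(f"cluster centers: {centers[0]:.2f}, {centers[1]:.2f}")
```

The supervised model can be scored against known answers; the clustering cannot, which is one reason unsupervised results need more careful human interpretation.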

Reinforcement learning (RL), by contrast, rewards the AI (the “agent”) for good responses but not for bad ones. The agent learns to choose responses that are classified as “good” and to avoid those deemed “bad.” RL is a bottom-up approach that learns by trial and error; it has been used in training algorithms to play chess and Go, for example.
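The trial-and-error idea can be sketched with one of the simplest RL setups, an epsilon-greedy agent choosing among a fixed set of responses. This is an illustrative toy, not how chess or Go systems are built: the reward list, the function name `train_agent`, and the parameter values are all assumptions made for the example.

```python
import random

def train_agent(rewards, steps=500, epsilon=0.2, seed=0):
    """Learn by trial and error which response earns the most reward."""
    rng = random.Random(seed)
    values = [0.0] * len(rewards)  # running estimate of each response's reward
    counts = [0] * len(rewards)    # how often each response has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random response.
            action = rng.randrange(len(rewards))
        else:
            # Exploit: otherwise pick the response that looks best so far.
            action = max(range(len(rewards)), key=values.__getitem__)
        reward = rewards[action]
        counts[action] += 1
        # Incremental average of the rewards seen for this response.
        values[action] += (reward - values[action]) / counts[action]
    return values

# Hypothetical environment: only the third response is rewarded as "good".
rewards = [0.0, 0.0, 1.0]
values = train_agent(rewards)
best = max(range(len(values)), key=values.__getitem__)
print("learned values:", values)
print("preferred response:", best)
```

Nobody tells the agent which response is correct; it discovers the rewarded one only because it occasionally explores.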

Like any computer function, AI is only as good as the data it is trained on. Skewed data sets will result in similarly skewed outcomes. AI is based on the training and decision algorithms it’s been told to use. Bias can be built into AI; therefore, it can be avoided. Balance, ethics, and transparency will be key elements in all AI applications and especially for any applied to forensic science.

Timeline of AI achievements

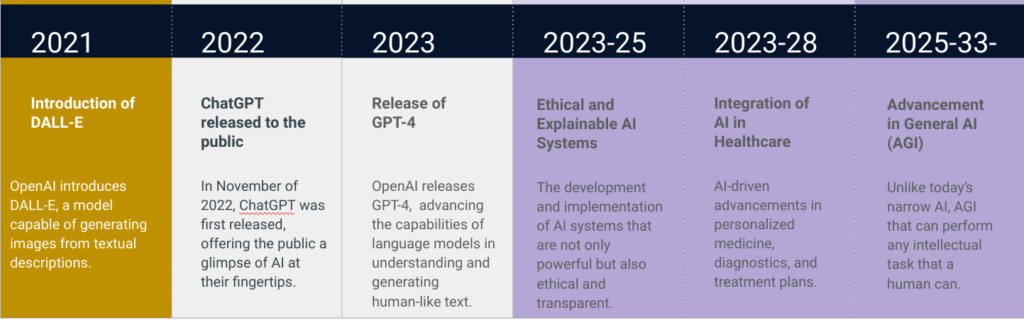

Although it feels as if AI is new, the topic and its adjacent disciplines have been around for nearly three-quarters of a century. What follows is a brief timeline of AI achievements that have shaped and driven it in research and our daily lives (Figures 2 and 3).

Figure 2

1950

The Turing Test (originally called the imitation game) was developed to evaluate a machine’s ability to demonstrate intelligent behavior indistinguishable from that of a human being. Turing proposed a test in which a human evaluator would judge conversations between a human and a machine designed to generate human-like responses. The evaluator would know that one participant is a machine, but both participants would be separated and communicate through a text-only channel, such as a keyboard and screen, to ensure the result is not influenced by the machine’s speech capabilities. If the evaluator could not reliably distinguish between the human and the machine, the machine would be considered to have passed the test. The evaluation would focus solely on how closely the machine’s answers resembled those of a human, rather than the accuracy of the answers. Since Turing introduced the test, it has been both highly influential (it is one of the most cited papers in computing) and widely criticized by those who argue a machine cannot have a “mind” or “consciousness” and therefore cannot “understand” as humans do. It remains an important concept in the philosophy of artificial intelligence.

1957

In 1957, Cornell University psychologist Frank Rosenblatt invented the first trainable neural network, the Perceptron, which was inspired by the biological behavior of neurons. The Perceptron is a single-layer artificial neuron that classifies input into two categories. It takes multiple weighted inputs, sums them, adds a bias, and then uses an activation function to predict the class of the input as either 1 or 0. The Perceptron’s design is similar to modern neural nets, but it only has one layer with adjustable weights and thresholds, sandwiched between input and output layers. Rosenblatt also introduced the term “back-propagating errors” in 1962, which became a critical concept for future AI work.
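The mechanism described above (weighted inputs, a sum, a bias, and a step activation that outputs 1 or 0) fits in a few lines of Python. The sketch below trains a single perceptron on the logical AND function using the classic error-correction update; the training data, learning rate, and function names are choices made for this illustration, not a reconstruction of Rosenblatt’s original hardware.

```python
def step(z):
    """Step activation: classify the weighted sum as 1 or 0."""
    return 1 if z >= 0 else 0

def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus the bias, passed through the step."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Nudge weights and bias toward the target whenever a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Labeled examples of logical AND: output is 1 only when both inputs are 1.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_samples]
print("predictions:", predictions)
```

A single perceptron can only separate classes with a straight line, which is why it learns AND but cannot learn a function such as XOR; that limitation is what multi-layer networks later addressed.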

1966

ELIZA is an early natural language processing program developed by Joseph Weizenbaum at MIT. Designed to investigate human-machine communication, ELIZA mimicked conversation through pattern matching and substitution techniques, creating the illusion of understanding without truly comprehending the content of the conversation. The most well-known version emulated a Rogerian psychotherapist, reflecting users’ words back to them using predefined rules to generate non-directive questions. As one of the first chatterbots (what we would now call a chatbot), ELIZA was also among the initial programs capable of attempting, but failing, the Turing test.
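Pattern matching and substitution are easy to demonstrate. The sketch below is a toy in the spirit of ELIZA, not Weizenbaum’s actual script: the three rules, the small pronoun table, and the fallback reply are all invented for this example.

```python
import re

# Swap first-person words for second-person ones when echoing the user.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Each rule pairs a pattern with a non-directive reply template.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

def reflect(fragment):
    """Substitute pronouns word by word, leaving other words untouched."""
    return " ".join(REFLECTIONS.get(word.lower(), word)
                    for word in fragment.split())

def respond(text):
    """Return the reply for the first matching rule, or a generic prompt."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."

print(respond("I feel ignored by my boss."))
print(respond("The weather is nice."))
```

The program never models what “boss” or “ignored” means; it only rearranges the user’s own words, which is exactly the illusion of understanding described above.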

1997

IBM’s Deep Blue defeated reigning world chess champion Garry Kasparov in a 1997 rematch in New York City. This marked the first time a computer had beaten a reigning world champion in a match played under tournament conditions. The victory is considered a historic moment in artificial intelligence, highlighting the potential of computational systems.

Figure 3

2011

Apple introduced Siri, an intelligent personal assistant and knowledge navigator for its family of computer products. It uses voice, gestures, and a natural-language interface to answer questions, make recommendations, and perform actions. It adapts to the user’s language use, searches, and preferences, returning more individualized results over time. Siri is important because it had a major impact on placing AI in the average person’s hands, setting the stage for AI being ubiquitous in computing and internet activities.

2016

Google’s AlphaGo defeated champion Lee Sedol in a five-game Go match, showing the capabilities of deep learning and reinforcement learning. AlphaGo won all but the fourth game. Go is a complex board game that requires intuition and creative and strategic thinking; it is considered to be the oldest board game continuously played to the present. It presents a difficult challenge in the field of AI and is considerably more difficult to solve than chess: While chess has between 10^111 and 10^123 positions, Go has around 10^700. AlphaGo is distinctly different from other AI efforts. AlphaGo uses neural networks (more complex versions of Rosenblatt’s Perceptron) to estimate its probability of winning. AlphaGo uses an entire online library of Go moves, strategies, players, and literature. Once set up, AlphaGo runs by itself, without any help from the development team, and evaluates the best way to win a particular Go match. AlphaGo’s victory was a huge event in AI research and is considered the end of the era of using board games as a platform to develop AI capabilities.

2021

DALL-E was announced and made available to the public. DALL-E and its iterations are text-to-image models developed by OpenAI using deep learning to generate digital images from natural language prompts and descriptions. For example, Figure 4 is the result of the prompt “create a photo-realistic image of a female scientist working on DNA in a laboratory.”

Figure 4

DALL-E (now up to version 3) raised numerous ethical issues about intellectual property, creativity, and the creation of deepfakes (media digitally manipulated to replace one person’s likeness convincingly with that of another, like a celebrity in a commercial that they never appeared in).

2022, 2023

In November of 2022, ChatGPT was first released, offering everyone AI at their fingertips. ChatGPT is an artificial intelligence (AI) chatbot that can understand and generate human-like language. It uses machine learning algorithms to analyze large amounts of data and respond to user questions in a variety of formats. ChatGPT can also compose written content, such as articles, essays, social media posts, emails, and code. ChatGPT was developed by OpenAI, the same company that created DALL-E. ChatGPT seems to provide a more engaging experience than traditional search engines, but it has limitations, including generating text that looks correct but is actually meaningless or wrong (“hallucinations”), failing to verify information, and failing to cite sources. Despite these limitations, ChatGPT revolutionized the field of AI and the public’s access to it. GPT-4, the model behind the most current version to date, advanced the capabilities of language models in understanding and generating human-like text.

The near-future of AI

AI is expected to become more prevalent as technology advances, and some predict it will revolutionize many sectors. Smart products, military and cybersecurity, transportation, education, resource management at big data centers, and personalized treatment plans and diagnostics in medicine will all see AI integration very soon.

Use of AI in forensic science

First of all, it’s important to remember that AI applications to improve forensic analysis are largely in the conceptual or R&D phase. Federal organizations, like the National Institute of Standards and Technology (NIST)[1] and the National Artificial Intelligence Initiative[2], are working on awareness, strategic investment, and ethical transparency in the implementation of AI. The NIST AI effort intends to cultivate trust in the design, development, use, and governance of AI in ways that increase safety and improve security. On October 30, 2023, President Biden signed an Executive Order (EO) to build U.S. capacity to evaluate and mitigate the risks of AI systems to ensure safety, security, and trust, while promoting an innovative, competitive AI ecosystem that supports workers and protects consumers.

[1] https://www.nist.gov/artificial-intelligence

[2] https://ai.gov/

Although some work has been done, largely in the digital media arena, the majority of forensic science has yet to have AI projects implemented. Current AI applications include expert systems, machine learning systems, or hybrids of the two, typically using supervised learning. These applications could enhance human experts’ performance, overcoming limitations of traditional methods, including bias (cognitive and statistical), managing large data sets, detecting patterns, and characterizing evidence, among others. Policy issues loom large, however, and, as with all technological innovations, the public are playing catch-up, with lawmakers running a distant third.

Applying AI tools in forensic science to extract new information from massive datasets, enhancing knowledge and reducing human subjectivity and error, provides a stronger scientific basis that could favor the admissibility of expert evidence. Furthermore, modeling human cognitive skills with computational methods offers several new possibilities for forensic science, providing effective tools to reinforce the scientific method, to improve the professional competencies of the forensic examiner, and to provide an alternative opinion on a case. For these reasons, it is essential to reflect on whether AI is really going to replace, diversify, or complement and expand previous well-known solutions to forensic problems.

Many forensic methods employ the subjective evaluation of objective criteria. AI could be used to help identify, evaluate, and quantify the similarity between two items, perhaps with an eye to determining a random match frequency. Exploratory research has been conducted on a number of forensic domains, like firearms and shoe prints, among others. AI offers a promising opportunity to assist analysts in interpreting complex DNA analyses. Several studies have explored using classification methods to enhance current probabilistic genotyping algorithms. They use a dataset of known mixed samples to determine the number of contributors in unknown samples. Investigative genetic genealogy, a growing field, also stands to benefit significantly from AI/ML techniques. This field involves generating and analyzing SNP data to uncover genealogical relationships.

The impetus for much of this visual analysis is the widening use of facial recognition in law enforcement and security situations. Facial recognition, while accepted by many, has significant problems that are illustrative of the problems AI will have to address. Faces “scraped” from the internet, without balancing bias, quality, or privacy, fuel some facial recognition software. If the algorithm is trained mostly on White and male faces, it will perform poorly on faces that do not fit that profile. A 2019 National Institute of Standards and Technology (NIST) study showed that many facial recognition systems falsely identified Black and Asian faces between 10 and 100 times more often than White ones. Issues like this point to the necessity for openness and transparency about how AI methods are developed, trained, and operate.
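A disparity like the one NIST reported is found by measuring error rates separately for each demographic group rather than in aggregate. The sketch below computes a per-group false match rate (the share of different-person comparisons that the system wrongly declares a match). The trial data are fabricated purely to show the arithmetic, and the group labels and the function name `false_match_rates` are hypothetical.

```python
from collections import defaultdict

def false_match_rates(trials):
    """False match rate per group: wrong matches / different-person comparisons."""
    impostor_counts = defaultdict(int)
    false_matches = defaultdict(int)
    for group, same_person, declared_match in trials:
        if not same_person:  # only comparisons between different people count
            impostor_counts[group] += 1
            if declared_match:
                false_matches[group] += 1
    return {group: false_matches[group] / impostor_counts[group]
            for group in impostor_counts}

# Fabricated trials as (group, same_person, declared_match) tuples:
# 1,000 different-person comparisons per group, with 1 and 10 false matches.
trials = (
    [("group_a", False, False)] * 999 + [("group_a", False, True)] * 1
    + [("group_b", False, False)] * 990 + [("group_b", False, True)] * 10
)

rates = false_match_rates(trials)
disparity = rates["group_b"] / rates["group_a"]
print(rates)
print(f"group_b is falsely matched {disparity:.0f} times as often as group_a")
```

Pooled together, these two groups would show a false match rate of about half a percent, which hides the tenfold gap between them.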

From black boxes to glass boxes

It is not possible to assess whether AI provides benefits without knowing how it works, how well it works, and how it is used. AI tools often operate as black boxes, making it challenging to understand how they generate specific results. Unlike traditional scientific methods that test causal relationships, these tools infer patterns and correlations from data, sometimes leading to spurious results. Biases can also stem from limited or biased datasets, calling the reliability of these tools into question.

Additionally, vendors often withhold source code from courts, citing it as proprietary or a trade secret. For instance, in the 2021 New Jersey case State v. Pickett, the trial court denied the defense access to TrueAllele’s source code on these grounds, a decision the appellate court later reversed. The City of New York pursued legislation about automated decision systems used by its agencies after the Office of the Chief Medical Examiner was found to be using unvalidated software in DNA analysis. The algorithm’s source code was a secret for years until a federal judge granted a motion to lift a protective order on it.

The use of AI-derived information raises legal and ethical concerns, especially regarding civil liberties and adherence to evidence rules. Would a computer expert be required to explain how an AI tool works before the forensic expert could testify to the results? Would the jury understand? Courts recognize the challenge of demanding full explainability from AI tools while balancing constitutional rights such as due process and confrontation. Even when AI tools meet the criteria for admissibility, their effectiveness and reliability remain topics of discussion in the forensic community, which affects their use in legal contexts.

What we should strive for is a “glass box” AI, one designed to be interpretable and understandable by laypersons, who can see how the AI works, what information it relies upon for its calculations, and what those calculations are. Transparency will be key, going forward, for any forensic AI applications.
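One way to picture a glass box is a model simple enough that every step of its calculation can be printed and read. The sketch below uses a plain weighted sum, where each input’s contribution to the final score is visible. It is a deliberately simplified illustration: the feature names, weights, and bias are hypothetical and do not describe any real forensic comparison method.

```python
def explain_score(weights, features, bias=0.0):
    """Return a score together with each feature's contribution to it."""
    contributions = {name: weights[name] * features[name] for name in weights}
    return bias + sum(contributions.values()), contributions

# Hypothetical weights and measured features for one comparison.
weights = {"shared_minutiae": 0.5, "ridge_pattern_agreement": 2.0}
features = {"shared_minutiae": 8.0, "ridge_pattern_agreement": 0.5}

score, contributions = explain_score(weights, features, bias=-1.0)

# List the inputs from most to least influential, then the total.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
print(f"score: {score:.2f}")
```

A deep neural network offers no such line-by-line account, which is the trade-off at the heart of the black-box debate: more flexible models are usually harder to explain to a judge or jury.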

Challenges

Implementation of AI systems in forensic science faces three main challenges. The first is the problem of training and input data. As in any other field, human error is a possibility in forensic analysis. Mistakes can occur during evidence collection, preservation, handling, or analysis, potentially compromising the integrity and reliability of the evidence. Factors such as inadequate training of both humans and algorithms, lack of resources, or pressures to produce results can contribute to human errors. The training data for an algorithm can have several issues: it might be skewed, incomplete, outdated, disproportionate, or contain historical biases. These problems can negatively impact algorithm training and perpetuate the biases in the data. Additionally, the algorithm’s implementation can also introduce biases or flaws. These could arise from statistical biases, moral or legal considerations, poor design, or data processing steps like parameter regularization or smoothing. Existing databases are known to be demographically skewed; for example, in the Combined DNA Index System (CODIS), DNA profiles from Black persons are collected at two to three times the rate of those from White persons. Any forensic AI algorithms need to be trained on unbiased data sets; the composition of the data needs to be transparent.

The second main challenge is validation. Some forensic techniques and methods lack sufficient scientific validation. The validation process involves rigorous testing and research to establish the accuracy and reliability of a technique. Without proper validation, the evidentiary value of these techniques becomes questionable, potentially leading to erroneous interpretations and wrongful convictions. In some forensic disciplines, there is a lack of standardized protocols and methodologies for analyzing and interpreting evidence; where there are standards, there is a lack of adoption for a variety of reasons. This absence of uniformity can lead to inconsistencies in the conclusions reached by different experts examining the same evidence. The absence of clear standards can also make it difficult to evaluate the reliability of the findings or to reproduce them. Moreover, AI is so new and moving so fast that standardization is non-existent.

Validation of AI algorithms is crucial to ensure their accuracy, reliability, and fairness. This process involves several key steps. First, data is split into training, validation, and test sets to develop and evaluate the model. Cross-validation techniques help in tuning the model and preventing overfitting. Performance metrics like accuracy, precision, recall, and mean squared error are used to assess the model’s performance. Bias and fairness testing are also essential, involving checks for biases related to sensitive attributes and using fairness metrics to ensure equitable outcomes. Robustness testing, including adversarial and out-of-distribution testing, evaluates how well the model handles unexpected or adversarial data. Validation also covers data processing steps, ensuring correct feature engineering, normalization, and standardization. Ethical and legal compliance is critical, with a focus on regulations like General Data Protection Regulation (GDPR) and addressing ethical concerns. Continuous monitoring and re-validation are necessary to detect model drift and maintain performance over time. User feedback is incorporated to identify issues and iteratively improve the algorithm. This comprehensive validation approach ensures AI systems are reliable, fair, and effective.
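The first few steps above (splitting the data, cross-validation, and performance metrics) can be sketched in plain Python. This is a minimal illustration under assumed settings: the 60/20/20 split, the fold scheme, the toy labels, and the helper names are choices made for the example, and a real validation study would rely on established, tested libraries and far more data.

```python
import random

def split_dataset(items, train=0.6, validation=0.2, seed=0):
    """Shuffle once, then cut into training, validation, and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

def k_fold_indices(n, k):
    """Yield (training, held_out) index lists; each item is held out once."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i, held_out in enumerate(folds):
        training = [j for fold in folds[:i] + folds[i + 1:] for j in fold]
        yield training, held_out

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

train_set, validation_set, test_set = split_dataset(range(100))
print(len(train_set), len(validation_set), len(test_set))

# Toy ground truth and model output, invented for the example.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision and recall are reported alongside accuracy because a forensic tool’s two kinds of error (a false inclusion and a false exclusion) carry very different consequences.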

The third and final challenge is with interpretation and transparency. Forensic evidence often requires subjective interpretation by forensic experts. The subjective nature of interpretation can introduce personal biases, both conscious and unconscious, that can affect the conclusions drawn from the evidence. These biases can arise from various factors such as confirmation bias, contextual bias, or even cognitive limitations of the experts. Forensic science can be complex and technical, and the language used by forensic experts may be difficult for non-experts, including jurors and judges, to understand. Miscommunication or misinterpretation can occur when complex scientific concepts are not effectively explained, leading to misunderstandings or incorrect conclusions. Explaining forensic AI applications to a jury presents significant issues.

Addressing these problems requires ongoing efforts to improve the scientific foundation of forensic disciplines, enhance standardization, minimize bias, provide comprehensive training, and promote transparency in the interpretation and presentation of forensic evidence.

How could AI improve the interpretation of forensic evidence?

AI has the potential to significantly enhance the interpretation of forensic evidence in several ways, addressing many of the challenges and problems associated with traditional forensic methods. AI systems can follow standardized and consistent procedures for evidence analysis. They don’t suffer from issues like fatigue, stress, or bias that can affect human experts. This can help ensure that evidence is interpreted consistently across different cases. AI can analyze and recognize complex patterns in forensic data more effectively and quickly than humans. This can be particularly useful in tasks such as fingerprint and DNA analysis, where large datasets need to be compared. By automating routine and repetitive tasks, AI can help reduce the chances of human error in the collection and analysis of forensic evidence. This can improve the overall quality and reliability of evidence.

AI can process and analyze vast amounts of data, including text, images, and other forms of evidence, at speeds that would be impossible for humans. This can lead to the discovery of previously unnoticed connections or clues in cases. AI systems can cross-reference and integrate various forms of evidence, helping investigators build a more comprehensive picture of a case. For example, they can link physical evidence with digital evidence or correlate different types of data. AI can help assess the risk of potential misinterpretation or bias in forensic evidence. It can flag cases where there might be inconsistencies or where further human review is required, reducing the likelihood of wrongful convictions.

In cases involving multilingual evidence or international investigations, AI can assist in translating and interpreting documents and conversations, making the evidence more accessible to investigators. AI can process evidence more efficiently and at a lower cost than human experts. This can help law enforcement agencies and forensic laboratories handle cases more quickly and cost-effectively. AI systems can continuously learn and improve their performance based on new data and insights. This adaptability is crucial in a field like forensic science, where methods and techniques are constantly evolving. AI can help reduce the backlog of unprocessed evidence, which is a significant problem in many forensic laboratories. By automating routine tasks, AI can speed up the processing of evidence.

Conclusions

It’s important to note that AI in forensics is not without its challenges. The interpretation of forensic evidence is a complex process that often involves subjective judgment, and AI systems can also be vulnerable to bias if not properly designed and trained. Therefore, it’s essential to develop and implement AI systems in a way that is transparent, fair, and accountable.

Additionally, AI should not replace human experts entirely. Instead, it should be used as a complementary tool to assist human forensic experts in their work, making the process more efficient, accurate, and reliable. It should also be subject to rigorous testing, validation, and oversight to ensure its reliability and effectiveness in forensic investigations. The most achievable applications of AI in forensic science are those that can be integrated with existing workflows, have a clear societal benefit, and have been validated for accuracy and reliability. However, it’s crucial to ensure that these technologies are used ethically, transparently, and in compliance with legal and privacy regulations. Additionally, regular validation and quality control are essential to maintain the integrity of forensic processes.